Multimodal learning

Read other articles:

Koordinat: 49°36′59″N 06°08′20″E / 49.61639°N 6.13889°E / 49.61639; 6.13889 Rekosntruksi Benteng Thüngen merumahi Musée Dräi Eechelen. Benteng Thüngen adalah sebuah benteng bersejarah di Kota Luksemburg yang berada di wilayah selatan Luksemburg. Lokasi Benteng Thüngen berada di Taman Dräi Eechelen, kawasan Kirchberg, timur laut kota tersebut. Referensi Fort Thüngen (dalam bahasa Prancis). Service des sites et monuments nationaux. Diarsipkan dari vers...

Paulina Haning-Bullu Paulina Haning-Bullu (lahir 10 April 1956)[1] adalah seorang politikus Indonesia. Paulina Haning-Bullu adalah salah satu perempuan pertama di Nusa Tenggara Timur melalui proses panjang akhirnya dilantik sebagai Bupati Rote Ndao periode 2019–2024 di Aula Fernandes Kantor Gubernur NTT, pada Kamis, 14 Februari 2019. Paulina Haning-Bullu adalah istri dari mantan Bupati Rote Ndao dua periode, Leonard Haning. Dengan dilantiknya Paulina Haning Bullu menjadi Bupati Rote...

Cruise ship built in 2002 Carnival Pride Carnival Pride in Kiel, 2023 History Panama NameCarnival Pride Owner Carnival Corporation & plc OperatorCarnival Cruise Lines Port of registryPanama City, Panama Builder Kvaerner Masa-Yards Helsinki New Shipyard Helsinki, Finland CostUS $375 million Yard number500 Laid downMarch 30, 2000 LaunchedMarch 29, 2001 Sponsored byTamara Jernigan ChristenedJanuary 7, 2002 CompletedDecember 12, 2001 In service2002–present Identification Call sig...

Pour les articles homonymes, voir Bile (homonymie). Cet article est une ébauche concernant la biologie. Vous pouvez partager vos connaissances en l’améliorant (comment ?) selon les recommandations des projets correspondants. Le cycle entéro-hépatique de la bile ou cycle biliaire de Schiff La bile[1] est un liquide biologique jaune-verdâtre, légèrement basique (pH compris entre 7,6 et 8,6[2]) qui favorise la digestion, plus spécifiquement celle des lipides. Elle est sécrétée...

Clarence Seedorf Informasi pribadiNama lengkap Clarence Clyde Seedorf[1]Tanggal lahir 1 April 1976 (umur 48)Tempat lahir Paramaribo, Suriname[2]Tinggi 176 cm (5 ft 9 in)Posisi bermain GelandangKarier senior*Tahun Tim Tampil (Gol)1992–1995 Ajax 65 (11)1995–1996 Sampdoria 32 (3)1996–1999 Real Madrid 121 (15)1999–2002 Inter Milan 92 (14)2002–2012 AC Milan 300 (47)2012–2014 Botafogo 72 (23)Total 640 (100)Tim nasional1994–2008 Belanda 87 (11)Kepel...

North-south avenue in Manhattan, New York Template:Attached KML/Seventh Avenue (Manhattan)KML is from Wikidata Seventh AvenueSeventh Avenue South (south of 11th St)Fashion Avenue (26th–42nd Sts)Adam Clayton Powell Jr. Boulevard (north of 110th St)Seventh Avenue heading north to Greenwich Village and Central ParkNamesakeGarment District and Adam Clayton Powell Jr.OwnerCity of New YorkMaintained byNYCDOTLength5.3 mi (8.5 km)[1][2]LocationManhattan, New York CitySouth...

† Человек прямоходящий Научная классификация Домен:ЭукариотыЦарство:ЖивотныеПодцарство:ЭуметазоиБез ранга:Двусторонне-симметричныеБез ранга:ВторичноротыеТип:ХордовыеПодтип:ПозвоночныеИнфратип:ЧелюстноротыеНадкласс:ЧетвероногиеКлада:АмниотыКлада:Синапсиды�...

Offensive play in basketball For other uses, see Alley Oop (disambiguation). This article needs additional citations for verification. Please help improve this article by adding citations to reliable sources. Unsourced material may be challenged and removed.Find sources: Alley-oop – news · newspapers · books · scholar · JSTOR (January 2010) (Learn how and when to remove this template message) Trey Burke sets up an alley-oop to Glenn Robinson III for Mi...

آلة السدس بحار يقوم بقياس ارتفاع الشمس بالسدس. السدس (الجمع: السُدُسَات) هو آلة فلكية قديمة كانت تستخدم لقياس الزاوية بين جسمين أو نجمين، والتي اخترعها أبو محمود الخجندي في القرن العاشر. تستخدم آلة السدس في الأساس لتحديد الزاوية بين جرم سماوي والأفق الذي يعرف باسم الارتفاع....

British military award AwardConspicuous Gallantry CrossObverse of the medal. Ribbon: 32 mm, white with blue edges and a red central stripeTypeMilitary decorationAwarded for... an act or acts of conspicuous gallantry during active operations against the enemy.[1]Description36 mm max. width; silver cross patée imposed on a wreath of laurel, with the Royal Crown in a circular panel in the centre. Suspended by a ring from a plain suspension bar.CountryUnited Kingdom of Great Britain...

هنودمعلومات عامةنسبة التسمية الهند التعداد الكليالتعداد قرابة 1.21 مليار[1][2]تعداد الهند عام 2011ق. 1.32 مليار[3]تقديرات عام 2017ق. 30.8 مليون[4]مناطق الوجود المميزةبلد الأصل الهند البلد الهند الهند نيبال 4,000,000[5] الولايات المتحدة 3,982,398[6] الإمار...

2020 studio album by Sébastien TellierDomesticatedStudio album by Sébastien TellierReleased29 May 2020 (2020-05-29)Length32:03LabelRecord MakersProducernitJam CityMind GamersPhilippe ZdarVarnish La PiscineSébastien Tellier chronology L'Aventura(2014) Domesticated(2020) Singles from Domesticated A BalletReleased: 29 January 2020[1] Domestic TasksReleased: 8 April 2020[2] Stuck in a Summer LoveReleased: 19 May 2020[3] Professional ratingsAggreg...

نادي تريفيزو تأسس عام 1909، و1993، و2009، و2013، و2019 البلد إيطاليا المدرب لويجي راديشي (1 يوليو 1968–30 يونيو 1969) الموقع الرسمي الموقع الرسمي تعديل مصدري - تعديل نادي تريفيزو (بالإيطالية: F.C.[1] Treviso)، نادي كرة قدم إيطالي يقع في مدينة تريفيزو في إيطالي�...

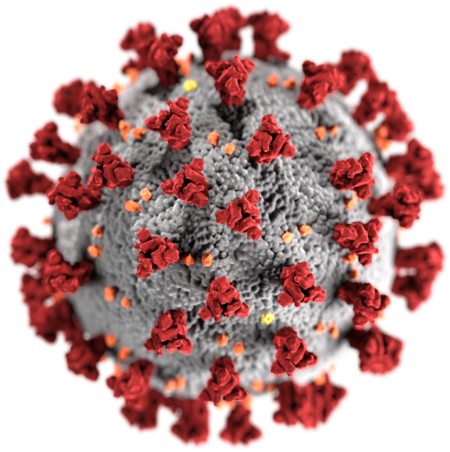

لجائحة فيروس كورونا تأثير كبير على صناعة الموسيقى خلال انتشارها في عام 2019-2020، وعلى جميع مجالات الفن حيث قد أُلغيت أو أُجلت العديد من الحفلات الموسيقية والمهرجانات الموسيقية، وجولات الحفلات الموسيقية حول العالم، وحفلات تقديم الجوائز. بالرغم من أن هذه الجائحة قامت بمنح ال�...

Her Majesty's Government of Western AustraliaCoat of arms of Western Australiaمعلومات عامةالبلد Australiaالاختصاص أستراليا الغربية نظام الحكم State Governmentالهيئات الفرعية Department of Commerce (en) [1]Tourism Western Australia (en) Head of state (sovereign) Monarch (Queen)رئيس الحكومة Premierالتكوين 21-10-1890المدة 133 سنةً و7 أشهرٍ ويومًا واحدًاالمقر الرئ...

Монгольская народная партиямонг. Монгол Ардын НамМНП / МАН Лидер Лувсаннамсрайн Оюун-Эрдэнэ Основана 25 июня 1920 Штаб-квартира Монголия, Улан-Батор, пр-т Молодёжи, 14191 Страна Монголия Идеология В настоящее время:Социал-демократияДемократический социализмЛевый национа�...

2016年夏季奥林匹克运动会汤加代表團汤加国旗IOC編碼TGANOC湯加體育與國家奧林匹克委員會網站oceaniasport.com/index_id_73.html(英文)2016年夏季奥林匹克运动会(里約熱內盧)2016年8月5日至8月21日運動員7參賽項目4个大项旗手开幕式:皮塔·陶法托夫瓦(跆拳道)[1]闭幕式:Siueni Filimone(田径)[2]历届奥林匹克运动会参赛记录(总结)夏季奥林匹克运动会198419881992199620...

Ilustrasi Jendral Yue Fei Wikimedia Commons memiliki media mengenai Yue Fei. Yue Fei adalah jendral terkenal dari Dinasti Song.[1][2] Ia adalah jendral utama dalam pengembalian daerah yang direbut Dinasti Jin di bawah Kaisar Song Gaozong.[1][2] Kisah asal mula penganan Cahkwe terkait dengan kematiannya.[1][2] Yue Fei difitnah oleh pejabat kerajaan Qin Hui yang menyebabkan dirinya dihukum oleh Kaisar Song Gaozong.[1][2] Hal ini me...

Rank in the Swedish Navy CaptainkommendörFlag of the captain, Swedish Navy.Shoulder mark of a Swedish captain.Sleeve insignia of a Swedish captain.Country SwedenService branchSwedish NavyAbbreviationKmd (Swedish),[1] Capt (N) (English)[2]RankCaptainNATO rank codeOF-05Non-NATO rankO-6Formation1600sNext higher rankRear admiral (lower half) (2000–)Senior captain (1972–2000)Rear admiral (–1972)Next lower rankCommanderEquivalent ranksColonel Kommendör, abbreviated kmd ...

1992 musical with songs by George and Ira Gershwin Crazy for YouOriginal cast recordingMusicGeorge GershwinLyricsIra GershwinBookKen LudwigBasisGirl Crazy by George Gershwin Ira Gershwin Guy Bolton John McGowanProductions1992 Broadway1993 West End2011 West End revival2022 Chichester Festival Theatre2023 West End revivalAwardsTony Award for Best MusicalLaurence Olivier Award for Best New MusicalLaurence Olivier Award for Best Musical Revival Crazy for You is a romantic comedy musical with a bo...